Understanding the dynamics of knowledge communities from their digital traces requires specifically designed tools to turn those raw textual traces into actual heterogeneous knowledge networks.

This first-level processing becomes particularly challenging when free text (such as publication abstracts or comments extracted from forums) has to be transformed into a human-comprehensible description. Most of the time, lexical analysis is sufficient to obtain a realistic lexical model of the precise topics addressed in a text, even though certain types of data may require finer treatment or benefit from qualitative analysis (see the study about online forums).
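To give a rough idea of what such a lexical step involves, here is a minimal Python sketch. It is not the code behind the scripts mentioned on this page: the stop-word list, the function names and the document-frequency ranking are all assumptions made for illustration, and it only links terms to terms, whereas a heterogeneous network would also include other kinds of nodes.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical stop-word list, kept tiny for illustration only.
STOPWORDS = {"the", "of", "and", "a", "in", "to", "from", "is", "are", "on"}


def tokenize(text):
    """Lowercase the text and keep alphabetic tokens that are not stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def extract_terms(documents, top_n=5):
    """Return the top_n terms ranked by the number of documents they appear in."""
    doc_freq = Counter()
    for doc in documents:
        # A set ensures each term is counted once per document.
        doc_freq.update(set(tokenize(doc)))
    return [term for term, _ in doc_freq.most_common(top_n)]


def cooccurrence_edges(documents, terms):
    """Count, for each pair of selected terms, the documents containing both."""
    vocab = set(terms)
    edges = Counter()
    for doc in documents:
        present = sorted(vocab & set(tokenize(doc)))
        edges.update(combinations(present, 2))
    return edges
```

Calling `extract_terms` on a list of abstracts or forum comments and passing the result to `cooccurrence_edges` yields weighted term pairs, which can then be drawn as a simple map. Real corpora would need multi-word term detection and a more careful weighting than raw counts.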

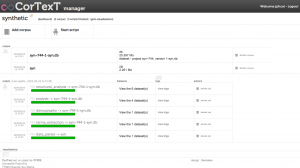

More generally, socio-semantic reconstructions (lexical extraction, heterogeneous maps, statistical analysis, etc.) can be produced on any given corpus with scripts I wrote, which can be readily used on this website: http://manager.cortext.net. The CorText Manager offers a digital space where users can upload their own corpora, analyze them and share their results. Succinct documentation of the scripts is available on this dedicated website.